Selection

Revision as of 17:22, 22 April 2009

First, the homework from Lab W.

What is Model Selection?

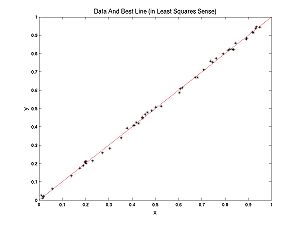

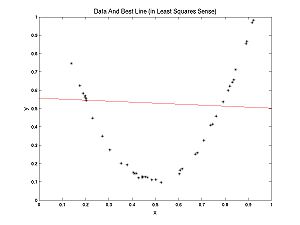

What is model selection? To answer this question, let's contrast model selection with parameter estimation. In the Optimization Lab, we fit lines to data. We were doing parameter estimation: trying to estimate the parameters m and b in the formula for a line, y = m*x + b. Recall my examples: we fit a line to nearly linear data and another line to nearly parabolic data.

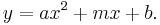

Clearly, in the second case, it would be more appropriate to fit a parabola to the data, rather than a line. How would we do that?

A parabola is described by the formula:  This is a line with a new term,

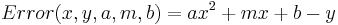

This is a line with a new term,  added. To fit a parabola the procedure is almost the same. First determine the errors in the data:

added. To fit a parabola the procedure is almost the same. First determine the errors in the data:

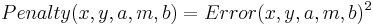

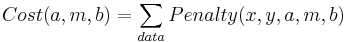

Now define the penalties, (let's be sensible and use LS rather the UG penalties):

And finally the cost function
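As a minimal sketch (in Python rather than MATLAB), the errors, least-squares penalties, and cost can be computed directly from their definitions. The function names `parabola` and `cost` and the example data are hypothetical, chosen only for illustration.

```python
def parabola(x, a, m, b):
    """Model: y = a*x^2 + m*x + b."""
    return a * x**2 + m * x + b


def cost(params, xs, ys):
    """Least-squares cost C(a, m, b) for the parabola model."""
    a, m, b = params
    errors = [y - parabola(x, a, m, b) for x, y in zip(xs, ys)]  # e_i
    penalties = [e**2 for e in errors]  # LS penalty p_i = e_i^2
    return sum(penalties)  # C = sum of p_i


# Hypothetical data generated exactly by y = 2x^2 - x + 1 (no noise)
xs = [0.0, 1.0, 2.0, 3.0]
ys = [parabola(x, 2.0, -1.0, 1.0) for x in xs]

print(cost((2.0, -1.0, 1.0), xs, ys))  # 0.0 at the true parameters
print(cost((2.0, 0.0, 1.0), xs, ys))   # 14.0 with the wrong slope
```

With noise-free data the cost is exactly zero at the true parameters; any other choice of (a, m, b) gives a positive cost.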

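Now we tweak the three parameters (a,m,b) to minimize the cost using, for example, the Nelder-Mead method we studied last week. Of course, MATLAB has its own routines for this problem, based on linear algebra, that are much better than Nelder-Mead: because the model is linear in a, m, and b, the least-squares cost can be minimized directly by solving a linear system instead of searching.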
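Below is a minimal sketch of a basic Nelder-Mead simplex search applied to the parabola cost. It is a simplified variant (reflection, expansion, inside contraction, shrink), not necessarily the version from last week's lab and not MATLAB's `fminsearch`; the function name `nelder_mead`, its parameters, and the example data are assumptions for illustration.

```python
def nelder_mead(f, x0, step=1.0, tol=1e-12, max_iter=5000):
    """Minimize f starting from x0 with a basic Nelder-Mead simplex search."""
    n = len(x0)
    # Initial simplex: x0 plus one vertex offset by `step` along each axis
    simplex = [list(map(float, x0))]
    for i in range(n):
        vertex = list(map(float, x0))
        vertex[i] += step
        simplex.append(vertex)
    values = [f(v) for v in simplex]

    for _ in range(max_iter):
        # Sort vertices from best (lowest cost) to worst
        order = sorted(range(n + 1), key=lambda k: values[k])
        simplex = [simplex[k] for k in order]
        values = [values[k] for k in order]
        if values[-1] - values[0] < tol:
            break

        # Centroid of every vertex except the worst one
        centroid = [sum(v[j] for v in simplex[:-1]) / n for j in range(n)]
        worst = simplex[-1]

        def toward_worst(t):
            """Point centroid + t * (worst - centroid)."""
            return [c + t * (w - c) for c, w in zip(centroid, worst)]

        reflected = toward_worst(-1.0)
        f_r = f(reflected)
        if f_r < values[0]:
            # Reflection is the new best point: try going further (expansion)
            expanded = toward_worst(-2.0)
            f_e = f(expanded)
            if f_e < f_r:
                simplex[-1], values[-1] = expanded, f_e
            else:
                simplex[-1], values[-1] = reflected, f_r
        elif f_r < values[-2]:
            simplex[-1], values[-1] = reflected, f_r
        else:
            # Contract the worst vertex halfway toward the centroid
            contracted = toward_worst(0.5)
            f_c = f(contracted)
            if f_c < values[-1]:
                simplex[-1], values[-1] = contracted, f_c
            else:
                # Shrink every vertex halfway toward the best one
                best = simplex[0]
                simplex = [best] + [
                    [b + 0.5 * (v_j - b) for b, v_j in zip(best, v)]
                    for v in simplex[1:]
                ]
                values = [values[0]] + [f(v) for v in simplex[1:]]

    best_index = min(range(n + 1), key=lambda k: values[k])
    return simplex[best_index], values[best_index]


# Hypothetical data generated exactly by y = 2x^2 - x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x**2 - x + 1 for x in xs]


def cost(params):
    """Least-squares cost C(a, m, b) for the parabola model."""
    a, m, b = params
    return sum((y - (a * x**2 + m * x + b)) ** 2 for x, y in zip(xs, ys))


params, final_cost = nelder_mead(cost, [0.0, 0.0, 0.0])
print(params, final_cost)
```

The search only needs cost evaluations, which is why it works for any penalty; the price is many more function evaluations than a direct linear-algebra solve.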

What if we fit a parabola to the nearly linear data? Note that if we set a = 0, we get y = m*x + b again, so a line is just a special case of a parabola. Because the data contain some noise, letting the fit adjust a as well as m and b will generally give a better (lower-cost) fit, and the fit is guaranteed to be no worse, since the best line is one of the parabolas being searched over. So why should we bother fitting lines to data? Why not just always fit parabolas?
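To check the "never worse" claim numerically, here is a minimal sketch of the linear-algebra approach: a least-squares polynomial fit obtained by solving the normal equations with Gaussian elimination. This is an illustrative stand-in, not MATLAB's routine; library routines such as `polyfit` typically use more numerically stable factorizations, and the normal equations become ill-conditioned at high degree. The names `polyfit_ls` and `ls_cost` and the noise values are hypothetical.

```python
def polyfit_ls(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (A^T A) c = A^T y.

    Returns coefficients [c0, c1, ..., c_degree] for y = c0 + c1*x + ... .
    """
    k = degree + 1
    # A[i][j] = xs[i] ** j, so (A^T A)[i][j] = sum of x^(i+j)
    ata = [[sum(x ** (i + j) for x in xs) for j in range(k)] for i in range(k)]
    aty = [sum(y * x**i for x, y in zip(xs, ys)) for i in range(k)]
    # Gaussian elimination with partial pivoting on the augmented matrix
    aug = [row + [rhs] for row, rhs in zip(ata, aty)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, k):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution
    coeffs = [0.0] * k
    for i in reversed(range(k)):
        s = sum(aug[i][j] * coeffs[j] for j in range(i + 1, k))
        coeffs[i] = (aug[i][k] - s) / aug[i][i]
    return coeffs


def ls_cost(coeffs, xs, ys):
    """Sum of squared errors for the polynomial with the given coefficients."""
    return sum(
        (y - sum(c * x**j for j, c in enumerate(coeffs))) ** 2
        for x, y in zip(xs, ys)
    )


# Hypothetical nearly linear data: y = 3x + 1 plus small fixed "noise"
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
noise = [0.1, -0.2, 0.05, 0.15, -0.1, 0.05]
ys = [3 * x + 1 + e for x, e in zip(xs, noise)]

line = polyfit_ls(xs, ys, 1)
parab = polyfit_ls(xs, ys, 2)
print("line cost:", ls_cost(line, xs, ys))
print("parabola cost:", ls_cost(parab, xs, ys))
```

The parabola's cost comes out no larger than the line's (up to rounding), even though the data were generated by a line.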

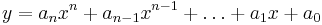

But wait, why stop at parabolas? An nth degree polynomial has the form:

$$ y = a_n x^n + a_{n-1} x^{n-1} + \dots + a_1 x + a_0 $$

For example, a 0th degree polynomial is a constant function, 1st degree is a line, 2nd degree is a parabola, etc. If we have N data points, a unique polynomial of degree N-1 will fit them exactly (provided no x values are repeated). Under the same condition, higher degree polynomials will also fit exactly, but they are no longer unique.
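A minimal sketch of the exact fit, using Lagrange interpolation to evaluate the unique polynomial of degree at most N-1 through N points with distinct x values. The function name `lagrange_eval` and the "noisy" measurements are hypothetical.

```python
def lagrange_eval(xs, ys, x):
    """Evaluate at x the unique polynomial of degree <= N-1 through N points."""
    if len(set(xs)) != len(xs):
        raise ValueError("x values must be distinct")
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        # Basis polynomial L_i(x): equals 1 at xi and 0 at every other xj
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


# Hypothetical noisy measurements of roughly y = 3x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 3.9, 7.2, 9.8, 13.1]

residuals = [y - lagrange_eval(xs, ys, x) for x, y in zip(xs, ys)]
print(residuals)
```

Every residual is zero: the degree-4 polynomial passes through all five points, noise included.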

So why don't we fit with a polynomial of high enough degree that there is no error? If we have 100 data points, why not use a 99th degree polynomial? Then there are no errors, no penalties, and zero cost! Perfect fit!?

There are two reasons you don't want to do that:

- Philosophical: parsimony. The model that generated our data (in both examples above) is a low degree polynomial plus noise. This model can be described with only a few parameters (a,m,b) and NoiseLevel. If our data are generated in the lab, we may never know the exact model that created them. But Occam's razor (a standard principle in science) tells us to pick the simplest model that is consistent with the data. The complexity of a model can be quantified by its number of parameters: a degree n polynomial has n+1 parameters. Thus a model consisting of a low degree polynomial with noise is simpler than a model consisting of a high degree polynomial with less noise, and the low degree polynomial is preferred unless the data really compel another conclusion. You can fit a line to the nearly parabolic data above, but clearly the data compel a parabola. Model selection makes this comparison precise: when are more parameters justified?

- Practical: overfitting (subject of next section). Fitting models with too many parameters produces fits that are sensitively tuned to the particular noise that appeared in the data (overfitting). If you run the experiment again, you get different noise. Typically, an overfitted model does a much worse job of predicting the new experiment (with new noise) than a simpler, more parsimonious model. Thus model selection has a practical purpose, not just a philosophical one.
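The practical point can be illustrated with a small simulation, sketched under made-up assumptions: the true model is y = 2x + 1, the noise is Gaussian with standard deviation 0.5, there are ten x values, and the experiment is repeated 200 times. We compare a least-squares line with the exact-fit degree-9 polynomial on a repeat of the experiment (same x values, fresh noise). The names `fit_line`, `run_experiment`, and `compare` are hypothetical.

```python
import random


def fit_line(xs, ys):
    """Ordinary least-squares line y = m*x + b (closed form)."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - xbar) ** 2 for x in xs)
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    m = sxy / sxx
    return m, ybar - m * xbar


def run_experiment(xs, rng, noise_level=0.5):
    """Hypothetical experiment: true model y = 2x + 1 plus Gaussian noise."""
    return [2 * x + 1 + rng.gauss(0, noise_level) for x in xs]


def compare(trials=200, seed=0):
    """Average squared prediction error on a repeated experiment."""
    rng = random.Random(seed)
    xs = [float(x) for x in range(10)]
    line_err = interp_err = 0.0
    for _ in range(trials):
        train = run_experiment(xs, rng)
        test = run_experiment(xs, rng)  # same x values, fresh noise
        m, b = fit_line(xs, train)
        line_err += sum((t - (m * x + b)) ** 2 for x, t in zip(xs, test))
        # The degree-9 interpolating polynomial reproduces the training data
        # exactly, so at these x values its predictions are the old noisy ys.
        interp_err += sum((t - y) ** 2 for y, t in zip(train, test))
    return line_err / trials, interp_err / trials


line_avg, interp_avg = compare()
print("line:", line_avg, "exact-fit polynomial:", interp_avg)
```

The exact-fit polynomial had zero cost on the original data, yet it predicts the repeated experiment worse than the two-parameter line, because it memorized the first experiment's noise.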